Practical Robotics and Computer Vision (BPC-PRP)

This is a complete lab documentations for the BPC-PRP course at FEEC, Brno University of Technology.

In this course studets are aiming to program robot to fealize maze escape task.

Authors

- Ing. Adam Ligocki, Ph.D.

- Ing. Petr Šopák

- Ing Jakub Minařík

Acknowledgments

This work was created with the support of project RP182401001 under the PPSŘ 2025 program.

Lectures

Overview

Week 1 - Course Introduction

- Course introductions

- Instructors

- Organization

- Assessment overview (tests and final exam)

Responsible: Ing. Adam Ligocki, Ph.D.

Week 2 - Linux OS, C++, CMake, Unit Tests

- Linux OS overview, command line interface, basic programs

- Compiling a simple program using GCC

- Simple CMake project

- Unit tests

Responsible: Ing. Jakub Minařík

Week 3 - Git

- Git basics

- Online Git services

- Code quality (formatting, static analysis, ...)

Responsible: Ing. Adam Ligocki, Ph.D.

Week 4 - ROS2 Basics

- Elementary concepts of ROS2

- RViz

Responsible: Ing. Jakub Minařík

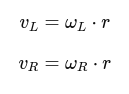

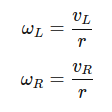

Week 5 - Kinematics & Odometry

- Differential chassis

- Wheel odometry

Responsible: Ing. Adam Ligocki, Ph.D.

Week 6 - Line Detection & Estimation

- Line sensor

- Differential sensor

- Line distance estimation

Responsible: Ing. Petr Šopák

Week 7 - Control Loop

- Line following principles

- Bang-bang controller

- P(I)D controller

Responsible: Ing. Adam Ligocki, Ph.D.

Week 8 - ROS2 Advanced

- DDS, node discovery

- launch system

- Visualization (markers, TFs, URDF, ...)

- Gazebo Responsible: Ing. Jakub Minařík

Week 9 - Robot Sensors & Architecture

- Understanding the range of robot sensors

- Deep dive into robot architecture

Responsible: Ing. Adam Ligocki, Ph.D.

Week 10 - Computer Vision 1

- CV overview

- Basic algorithms

- Image sensors

- Raspberry Pi & camera

Responsible: Ing. Petr Šopák

Week 11 - Computer Vision 2

- OpenCV usage

- ArUco detection

Responsible: Ing. Petr Šopák

Week 12 - Substitute Lecture

- To be announced (TBA)

Responsible: Ing. Adam Ligocki, Ph.D.

Exam Period - Final Exam

- Practical test (Maze escape task)

Laboratories

Overview

Lab 1 - Development Environment & First C++ Program

- Introduction to laboratory

- Linux Command Line Interface (CLI)

- C++ Review

- CLI compilation

- CLion and ROS 2 introduction

Responsible: Ing. Petr Šopák

Lab 2 - Project Workflow: Git, CMake & Team Project

- Git Basics and workflow

- Simple CMake project

- Unit tests

- Online repository

- Course project template

Responsible: Ing. Petr Šopák

Lab 3 - Data Capture & Visualization (ROS)

- ROS 2 in CLI

- Simple Node, Publisher, Subscriber

- RViz, Data Visualization

Responsible: Ing. Petr Šopák

Lab 4 - Motor, Kinematics & Gamepad

- Motor Control

- Forward and Inverse Kinematics

- Gamepad

Responsible: Ing. Jakub Minařík

Lab 5 - Line Estimation

- Line Sensor Usage

- Line Position Estimation

- Line Sensor Calibration

Responsible: Ing. Adam Ligocki, Ph.D.

Lab 6 - Line Following & PID

- Line Following Control Loop Implementation

Responsible: Ing. Petr Šopák

Lab 7 - Midterm Test (Line Following)

- Good Luck

Responsible: Ing. Adam Ligocki, Ph.D.

Lab 8 - LiDAR

- Understanding LiDAR data

- LiDAR Data Filtration

- Corridor Following Algorithm

Responsible: Ing. Petr Šopák

Lab 9 - Inertial Measurement Unit (IMU)

- Understanding IMU Data

- Orientation Estimation Using IMU

Responsible: Ing. Petr Šopák

Lab 10 - Camera Data Processing

- Understanding Camera Data

- ArUco Detection Library

Responsible: Ing. Petr Šopák

Lab 11 - Individual work

- Individual work

- Consultation

Responsible: Ing. Petr Šopák

Lab 12 - Midterm Test (Corridor Following)

- Good Luck!

Responsible: Ing. Adam Ligocki, Ph.D.

Final Exam - Maze Escape

- Good Luck!

Responsible: Ing. Adam Ligocki, Ph.D.

Lab 1 - Development Environment & First C++ Program

Responsible: Ing. Petr Šopák

This laboratory introduces the basic development environment used throughout the BPC-PRP course.

The goal is to get familiar with Linux, command-line tools, and to compile and run a simple C++ program.

By the end of this lab, you should be able to:

- navigate the Linux file system using the CLI,

- create and edit source files,

- compile and run a basic C++ program,

- understand the role of an IDE and ROS 2 in this course.

Linux & Command Line Basics (≈ 60 min)

Installation (optional)

To install Linux, please follow the Linux chapter.

Exercise

- Explore the system GUI.

- Open a terminal and navigate the file system.

- Practice basic CLI commands (see the Linux chapter):

- Check the current directory:

pwd - Create a directory:

mkdir <dir> - Enter a directory:

cd <dir> - Create a file:

touch <file> - List directory contents:

ls -la - Rename or move a file:

mv <old> <new> - Copy a file:

cp <src> <dst> - Remove a file:

rm <file> - Create/remove a directory:

mkdir <dir>,rm -r <dir>

- Check the current directory:

- Try a text editor:

nanoorvim

I installed vim and accidentally opened it. What now?

You can exit Vim with: press Esc, then hold Shift and press Z twice (Shift+Z+Z).More Info: https://www.vim.org/docs.php

More details about Linux will be introduced during the course.

First C++ Program – CLI Compilation (≈ 60 min)

Create a new project folder in your home directory and enter it.

Create a file main.cpp with the following content:

#include <iostream>

#define A 5

int sum(int a, int b) {

return a + b;

}

int main() {

std::cout << "My first C++ program" << std::endl;

int b = 10;

std::cout << "Sum result: " << sum(A, b) << std::endl;

return 0;

}

Compile the program using g++ (GCC C++ compiler):

g++ -o my_program main.cpp

Run the compiled binary:

./my_program

There are other alternatives, like Clang, LLVM, and many others.

TASK 1

- In your project folder, create an

includefolder. - In the

includefolder, create alib.hppfile and write a simple function in it. - Use the function from

lib.hppinmain.cpp. - Compile and run the program (tip: use

-I <folder>with g++ to specify the header search path).

TASK 2

- In the project folder, create

lib.cpp. - Move the function implementation from

lib.hpptolib.cpp; keep the function declaration inlib.hpp. - Compile and run the program (tip: you have to compile both

main.cppandlib.cpp). - Helper:

g++ -o <output_binary> <source_1.cpp source_2.cpp ...> -I <folder_with_headers> - Discuss the difference between preprocessing, compiling, and linking.

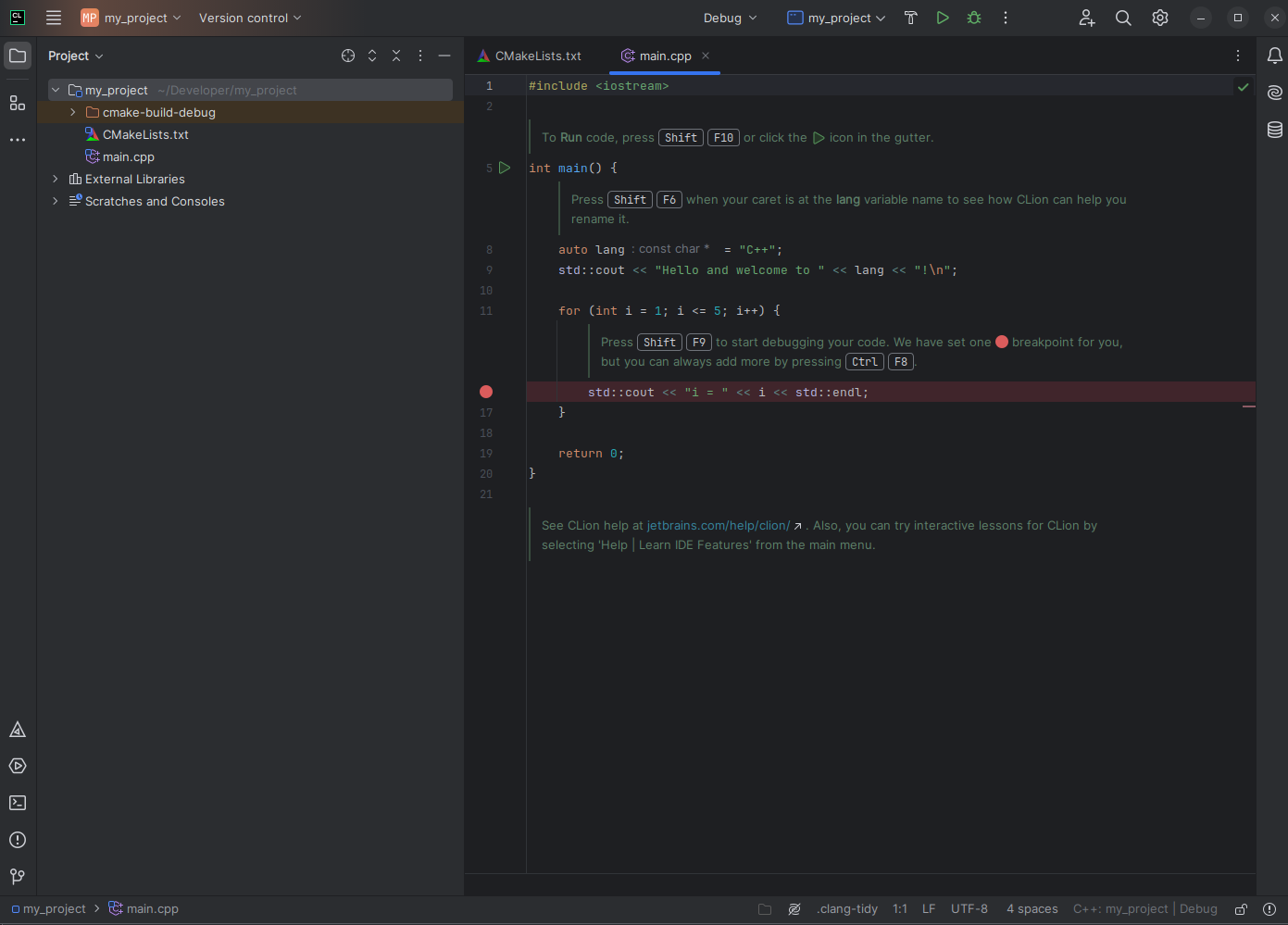

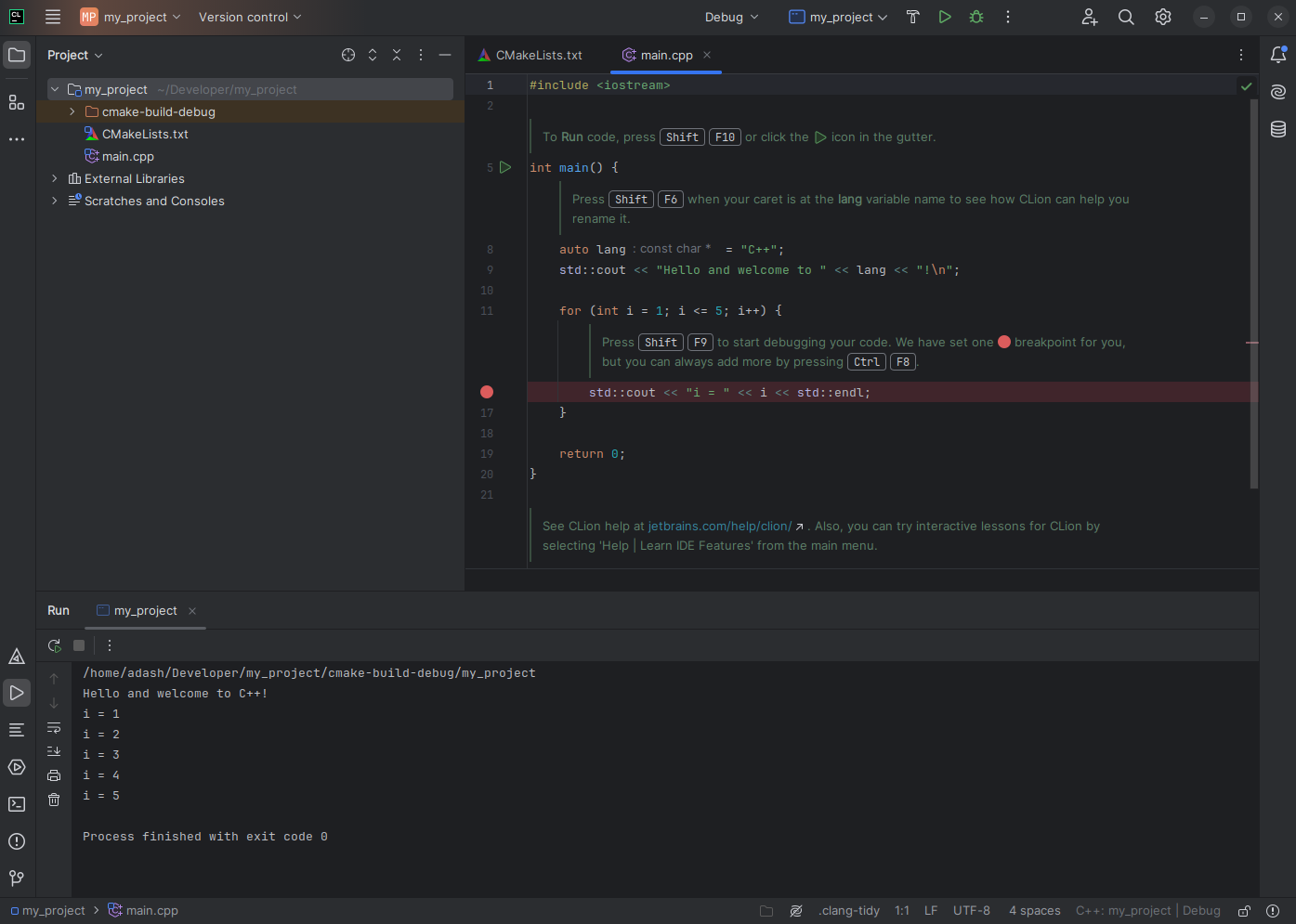

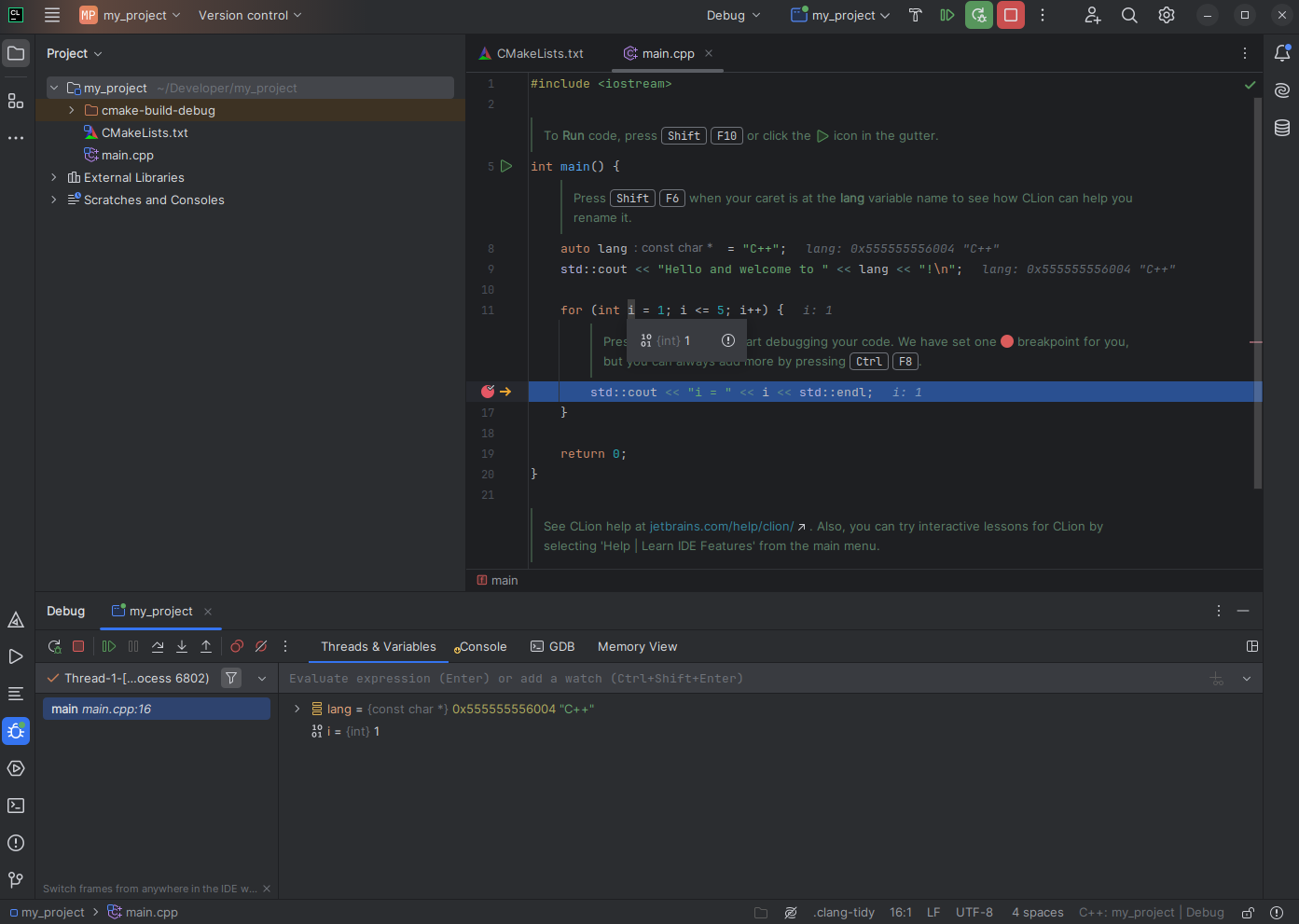

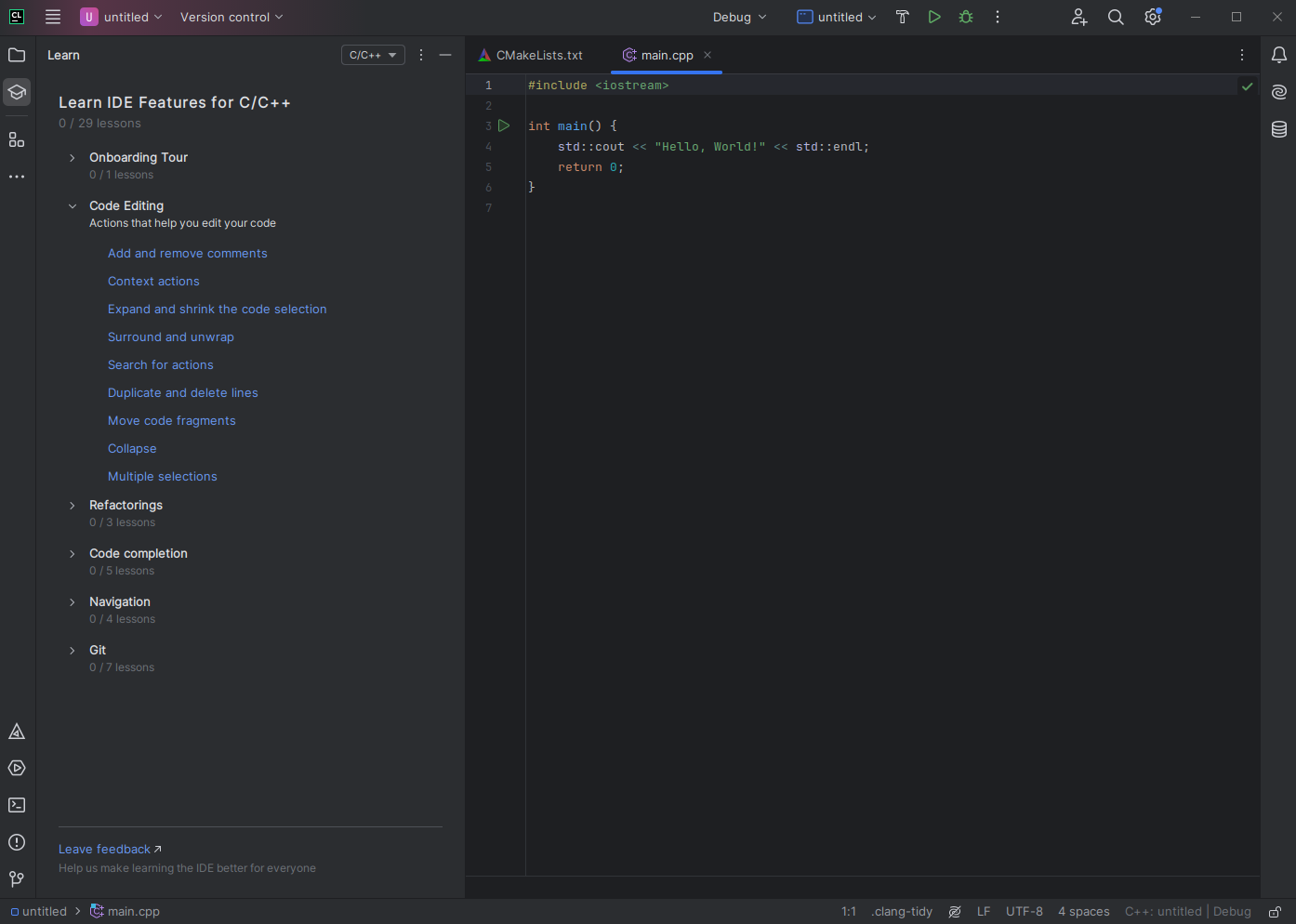

IDE Overview – CLion (≈ 15 min)

Installation

Install CLion using the Snap package manager:

sudo snap install --classic clion

Alternatively, download CLion from the official website and get familiar with it (see CLion IDE). By registering with your school email, you can obtain a free student license.

To learn how to control CLion, please take a look at the tutorial or the official docs.

TASK 3

- Learn how to control CLion

- Open the previously created C++ program (main.cpp) in CLion.

- Build and run the program using the IDE controls.

ROS 2 – Course Context (≈ 15 min)

ROS 2 (Robot Operating System 2) is a framework for building robotic systems. In this course, ROS 2 will be used later for:

- communication between software components,

- visualization (RViz, rqt),

simulation (Gazebo).

For installation and basic commands, see the ROS 2 chapter: ROS 2.

Lab 2 - Project Workflow: Git, CMake & Team Project

Responsible: Ing. Petr Šopák

This laboratory introduces the software development workflow used in the rest of the BPC-PRP course.

You will learn how to manage source code using Git, how C++ projects are structured, and how to build them using CMake.

The project created in this lab will serve as a base project for subsequent laboratories.

By the end of this lab, you should be able to:

- use Git for version control and teamwork,

- clone, commit, push, pull, and resolve merge conflicts,

- understand the structure of a C++ project,

- build a project using CMake,

- work with a shared repository in a team.

Git Basics & Team Workflow (≈ 75 min)

Before starting, read the Git tutorial.

Sign-up

Register on one of the following Git services:

This server will serve as your "origin" (remote repository) for the rest of the BPC-PRP course.

The instructors will have access to all your repositories, including their history, and can monitor your progress, including who, when, and how frequently commits were made.

Create a repository on the server to maintain your code throughout the course.

Cloning the Repository

HTTPS - GitHub Token

When cloning a repository via HTTPS, you cannot push changes using your username and password. Instead, you must use a generated GitHub token.

To generate a token, go to Profile picture (top-right corner) > Settings > Developer Settings > Personal Access Tokens > Tokens (classic) or click here. Your generated token will be shown only once, after which you can use it as a password when pushing changes via HTTPS until the token expires.

SSH - Setting a Different Key

You can generate an SSH key using the ssh-keygen command. It will prompt you for the file location/name and then for a passphrase. For lab use, set a passphrase. The default location is ~/.ssh.

When cloning a repository via SSH in the lab, you may encounter a problem with Git using the wrong SSH key.

You'll need to configure Git to use your generated key:

git config core.sshCommand "ssh -i ~/.ssh/<your_key>"

In this command, <your_key> refers to the private part of your generated key.

On GitHub, you can add the public part of your key to either a specific repository or your entire account.

-

To add a key to a project (repository level):

Go to Project > Settings > Deploy keys > Add deploy key, then check Allow write access if needed. -

To add a key to your GitHub account (global access):

Go to Profile picture (top-right corner) > Settings > SSH and GPG keys > New SSH key.

Team Task

As a team, complete the following steps:

- One team member creates a repository on the server.

- All team members clone the repository to their local machines.

- One team member creates a "Hello, World!" program locally, commits it, and pushes it to the origin.

- The rest of the team pulls the changes to their local repositories.

- Two team members intentionally create a conflict by modifying the same line of code simultaneously and attempting to push their changes to the server. The second member to push will receive an error from Git indicating a conflict.

- The team member who encounters the conflict resolves it and pushes the corrected version to the origin.

- All team members pull the updated version of the repository. Each member then creates their own

.hfile containing a function that prints their name. Everyone pushes their changes to the server. - One team member pulls the newly created

.hfiles and modifies the "Hello, World!" program to use all the newly created code. The changes are then pushed to the origin. - All team members pull the latest state of the repository.

Project Structure & CMake (≈ 60 min)

Before continuing, get familiar with CMake.

Now let's create a similar project from last lab, but using CMake.

- Determine your current location in the file system.

- Switch to your home directory.

- Create a new project folder.

- Inside this folder, create several subdirectories so that the structure looks like this (use the tree command to verify):

/MyProject

|--build

|--include

| \--MyProject

\--src

- Using any text editor (like

nanoorvim), create the following files in the project root:main.cpp,lib.cpp,lib.hpp, andCMakeLists.txt. - Move (do not copy) the

main.cppandlib.cppfiles into thesrcsubdirectory. - Move the

lib.hppfile into theinclude/MyProjectsubdirectory. - Move the

CMakeLists.txtfile into the root of the project folder.

Now your project should look like this:

/MyProject

|--build

|--CMakeLists.txt

|--include

| \--MyProject

| \--lib.hpp

\--src

|--lib.cpp

\--main.cpp

- Using a text editor, fill the

main.cpp,lib.cpp, andlib.hppfiles with the required code. - Using a text editor, fill the

CMakeLists.txtfile.

cmake_minimum_required(VERSION 3.10)

project(MyProject)

set(CMAKE_CXX_STANDARD 17)

include_directories(include/)

add_executable(my_program src/main.cpp src/lib.cpp)

Now compile the project. From the project folder, run:

cd my_project_dir # go to your project directory

mkdir -p build # create build folder

cd build # enter the build folder

cmake .. # configure; looks for CMakeLists.txt one level up

make # build program

./my_program # run program

Optional: Try to compile the program manually.

g++ <source1 source2 source3 ...> -I <include_directory> -o <output_binary>

- Delete project folder

BONUS TASK

Create the same project using the CLion IDE.

To learn how to control CLion, please take a look at the tutorial or the official docs.

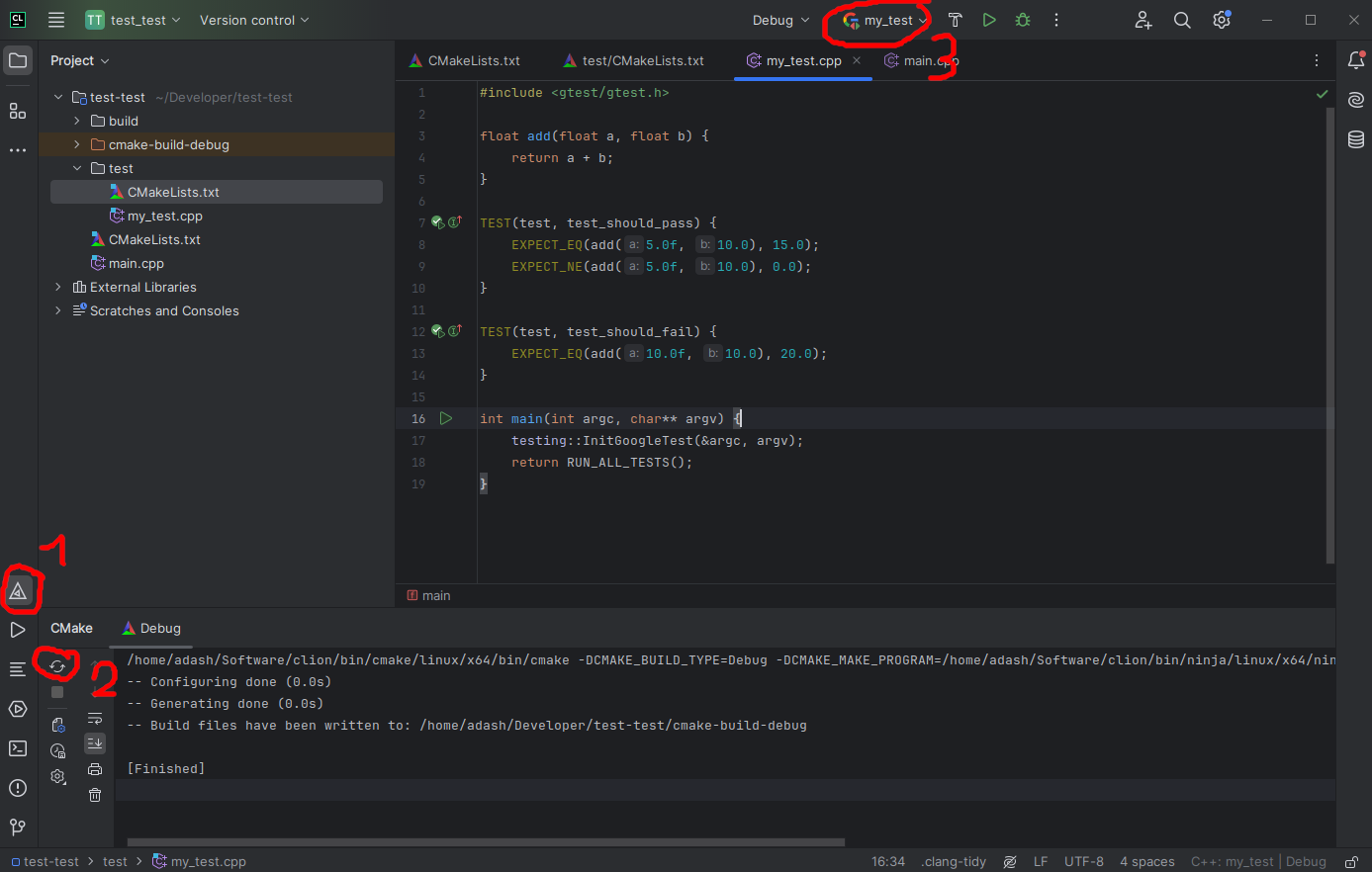

Unit Tests, GTest (30 min)

Unit tests are an effective way to develop software. Often called test‑driven development, the idea is: define the required functionality, write tests that cover the requirements, and then implement the code. When tests pass, the requirements are met.

On larger projects with many contributors and frequent changes, unit tests help catch regressions early. This supports Continuous Integration (CI).

There are many testing frameworks. In this course we will use GoogleTest (GTest), a common and well‑supported choice for C++.

GTest Installation

If there is no GTest installed on the system follow these instructions.

# install necessary packages

sudo apt update

sudo apt install cmake build-essential libgtest-dev

# compile gtest

cd /usr/src/gtest

sudo cmake .

sudo make

# install libs into system

sudo cp lib/*.a /usr/lib

Verify the libraries are in the system:

ls /usr/lib | grep gtest

# you should see:

# libgtest.a

# libgtest_main.a

Adding Unit Test to Project

In your project directory add the test folder.

/MyProject

|--include

|--src

\--test

Add the add_subdirectory(test) line at the end of CMakeLists.txt file.

Create CMakeLists.txt file in the test folder.

cmake_minimum_required(VERSION 3.10)

find_package(GTest REQUIRED)

include(GoogleTest)

enable_testing()

add_executable(my_test my_test.cpp)

target_link_libraries(my_test GTest::GTest GTest::Main)

gtest_discover_tests(my_test)

Create my_test.cpp file.

#include <gtest/gtest.h>

// Simple addition function for demonstration.

float add(float a, float b) {

return a + b;

}

TEST(AdditionTest, AddsPositiveNumbers) {

EXPECT_FLOAT_EQ(add(5.0f, 10.0f), 15.0f);

EXPECT_FLOAT_EQ(add(0.0f, 0.0f), 0.0f);

}

TEST(AdditionTest, AddsEqualNumbers) {

EXPECT_FLOAT_EQ(add(10.0f, 10.0f), 20.0f);

}

int main(int argc, char** argv) {

testing::InitGoogleTest(&argc, argv);

return RUN_ALL_TESTS();

}

In CLion, open the bottom console and run:

mkdir build && cd build

cmake ..

make

cd test

ctest

You should see the test output.

You can also run tests directly in CLion by reloading CMake; the test target will appear as an executable at the top of the window.

C++ Training (2h)

Take a look at the basic C++ tutorial and the more advanced multithreading tutorial.

Lab 3 - Data Capture & Visualization (ROS)

Responsible: Ing. Petr Šopák

Learning objectives

1) Fundamentals of ROS 2

- Setting Up a ROS 2 workspace (optional)

- Creating a custom ROS 2 node - implementing a basic publisher & subscriber

- Exploring essential ROS 2 CLI commands

- Utilizing visualization tools -

rqt_plotandrqt_graph

2) Implementing Basic Behavior for BPC-PRP robots

- Establishing connection to the robots

- Implementing IO node - Reading button inputs and controlling LEDs

BONUS: Advanced Visualizations

- Using RViz2 for graphical representation

- Creating basic graphical objects and defining their behavior

If you are using your own notebook, make sure to configure everything necessary in the Ubuntu environment! Refer to Ubuntu Environment Chapter for details.

Fundamentals of ROS 2 (Approx. 1 Hour)

Setting Up a ROS Workspace (5 min) - optional

Last week, you cloned a basic template that we will gradually extend with additional functionalities. The first step is to establish communication between the existing project and ROS 2.

There are many ways to set up a ROS project, but typically, a ROS workspace is created, which is a structured directory for managing multiple packages. However, for this course, we do not need a full ROS workspace, as we will be working with only one package. Let’s review the commands from Lab 1. Using the CLI, create a workspace folder structured as follows:

mkdir -p ~/ros_w/src

cd ~/ros_w

Next, copy the folders from previous labs into the src directory or re-clone the repository from Git (Lab 3). You can rename the template to something like "ros_package", but this is optional.

Finally, you need to compile the package and set up the environment:

colcon build

source install/setup.bash

Recap Notes: What is a ROS Workspace?

A ROS workspace is a structured environment for developing and managing multiple ROS packages. In this case, we created a workspace named ros_w (you can choose any name, but this follows common convention).

A ROS workspace allows for unified compilation and management of multiple packages. Each ROS package is a CMake project stored in the

srcfolder. Packages contain:

- Implementations of nodes (executable programs running in ROS),

- Definitions of messages (custom data structures used for communication),

- Configuration files,

- Other resources required for running ROS functionalities.

After (or before) setting up the workspace, always remember to source your environment before using ROS:

source ~/ros_w/install/setup.bashor add sourcing to your shell startup script

echo "source /opt/ros/<distro>/setup.bash" >> ~/.bashrc

Creating a custom node (55 min)

In this section, we will create a ROS 2 node that will publish and receive data (info). We will then visualize the data accordingly.

Required Tools:

Instructions:

-

Open a terminal and set the ROS_DOMAIN_ID to match the ID on your computer’s case:

export ROS_DOMAIN_ID=<robot_ID>This change is temporary and applies only to the current terminal session. If you want to make it permanent, you need to update your configuration file:

echo "export ROS_DOMAIN_ID=<robot_ID>" >> ~/.bashrc source ~/.bashrcCheck the contents of

~/.bashrc. The file runs at the start of each new Bash session and sets up your environment.cat ~/.bashrcTo verify the domain ID, use:

echo $ROS_DOMAIN_IDIf there are any issues with the .bashrc file, please let me know.

-

Open your CMake project in CLion or another Editor/IDE

-

Ensure that the IDE is launched from a terminal where the ROS environment is sourced

source /opt/ros/<distro>/setup.bash # We are using the Humble distribution

- Write the following code in

main.cpp:

#include <rclcpp/rclcpp.hpp>

#include "RosExampleClass.h"

int main(int argc, char* argv[]) {

rclcpp::init(argc, argv);

// Create an executor (for handling multiple nodes)

auto executor = std::make_shared<rclcpp::executors::MultiThreadedExecutor>();

// Create multiple nodes

auto node1 = std::make_shared<rclcpp::Node>("node1");

auto node2 = std::make_shared<rclcpp::Node>("node2");

// Create instances of RosExampleClass using the existing nodes

auto example_class1 = std::make_shared<RosExampleClass>(node1, "topic1", 1.0);

auto example_class2 = std::make_shared<RosExampleClass>(node2, "topic2", 2.0);

// Add nodes to the executor

executor->add_node(node1);

executor->add_node(node2);

// Run the executor (handles callbacks for both nodes)

executor->spin();

// Shutdown ROS 2

rclcpp::shutdown();

return 0;

}

- Create a header file in the

includedirectory to ensure the code runs properly. - Add the following code to the header file:

#pragma once

#include <iostream>

#include <string>

#include <rclcpp/rclcpp.hpp>

#include <std_msgs/msg/float32.hpp>

#include <chrono>

class RosExampleClass {

public:

// Constructor takes a shared_ptr to an existing node instead of creating one.

RosExampleClass(const rclcpp::Node::SharedPtr &node, const std::string &topic, double freq)

: node_(node), start_time_(node_->now()) {

// Initialize the publisher

publisher_ = node_->create_publisher<std_msgs::msg::Float32>(topic, 1);

// Initialize the subscriber

subscriber_ = node_->create_subscription<std_msgs::msg::Float32>(

topic, 1, std::bind(&RosExampleClass::subscriber_callback, this, std::placeholders::_1));

// Create a timer

timer_ = node_->create_wall_timer(

std::chrono::milliseconds(static_cast<int>(1000.0 / freq)),

std::bind(&RosExampleClass::timer_callback, this));

RCLCPP_INFO(node_->get_logger(), "Node setup complete for topic: %s", topic.c_str());

}

private:

void timer_callback() {

RCLCPP_INFO(node_->get_logger(), "Timer triggered. Publishing uptime...");

double uptime = (node_->now() - start_time_).seconds();

publish_message(uptime);

}

void subscriber_callback(const std_msgs::msg::Float32::SharedPtr msg) {

RCLCPP_INFO(node_->get_logger(), "Received: %f", msg->data);

}

void publish_message(float value_to_publish) {

auto msg = std_msgs::msg::Float32();

msg.data = value_to_publish;

publisher_->publish(msg);

RCLCPP_INFO(node_->get_logger(), "Published: %f", msg.data);

}

// Shared pointer to the main ROS node

rclcpp::Node::SharedPtr node_;

// Publisher, subscriber, and timer

rclcpp::Publisher<std_msgs::msg::Float32>::SharedPtr publisher_;

rclcpp::Subscription<std_msgs::msg::Float32>::SharedPtr subscriber_;

rclcpp::TimerBase::SharedPtr timer_;

// Start time for uptime calculation

rclcpp::Time start_time_;

};

At this point, you can compile and run your project. Alternatively, you can use the CLI:

colcon build --packages-select <package_name>

source install/setup.bash

ros2 run <package_name> <executable_file>

This will compile the ROS 2 workspace, load the compiled packages, and execute the program from the specified package.

TASK 1:

- Review the code – Try to understand what each part does and connect it with concepts from the lecture.

- Observe the program’s output in the terminal.

How to check published data

There are two main ways to analyze and visualize data in ROS 2 - using CLI commands in the terminal or ROS visualization tools.

1) Inspecting Published Data via CLI

In a new terminal (Don't forget to source the ROS environment!), you can inspect the data published to a specific topic:

ros2 topic echo <topic_name>

If you are unsure about the topic name, you can list all available topics:

ros2 topic list

Similar commands exist for nodes, services, and actions – refer to the documentation for more details.

2) Using ROS Visualization Tools

ROS 2 offers built-in tools for graphical visualization of data and system architecture. Real-Time Data Visualization - rqt_plot - allows you to graphically plot topic values in real-time:

ros2 run rqt_plot rqt_plot

In the GUI, enter

/<topic_name>/datainto the input field, click +, and configure the X and Y axes accordingly.

System Architecture Visualization - rqt_graph - displays the ROS 2 node connections and data flow within the system:

rqt_graph

When the system's architecture changes, simply refresh the visualization by clicking the refresh button.

TASK 2

Modify or extend the code to publish sine wave (or other mathematical function) values.

Don't forget to check the results using ROS 2 tools

(Hint: Include the std::sin)

(Bonus for fast finishers): Modify the code so that each node publishes different data, such as two distinct mathematical functions.

Implementing Basic Behavior for BPC-PRP robots (1 h)

In this section, we will get familiar with the PRP robot, learn how to connect to it, explore potential issues, and then write a basic input-output node for handling buttons and LEDs. Finally, we will experiment with these components.

Connecting to the Robot

The robot operates as an independent unit, meaning it has its own computing system (Raspberry Pi) running Ubuntu with an already installed ROS 2 program. Our goal is to connect to the robot and send instructions to control its behavior.

- First, power on the robot and connect to it using SSH. Ensure that you are on the same network as the robot.

ssh robot@prp-<color>

The password will be written on the classroom board. The robot may take about a minute to boot up. Please wait before attempting to connect.

- Once connected to the Robot:

- examine the system architecture to understand which ROS 2 nodes are running on the robot and what topics they publish or subscribe to.

- Additionally, check the important environment variables using:

env | grep ROS

The

ROS_DOMAIN_IDis particularly important. It is an identifier used by theDDS(Data Distribution Service), which serves as the middleware for communication in ROS 2. Only ROS 2 nodes with the same ROS_DOMAIN_ID can discover and communicate with each other.

-

(if it is necessary) Open a new terminal on your local machine (not on the robot) and change the

ROS_DOMAIN_IDto match the robot’s domain:export ROS_DOMAIN_ID=<robot_ID>This change is temporary and applies only to the current terminal session. If you want to make it permanent, you need to update your configuration file:

echo "export ROS_DOMAIN_ID=<robot_ID>" >> ~/.bashrc source ~/.bashrcAlternatively, you can change it by modifying the .bashrc file:

- Open the ~/.bashrc file in an editor, for example, using nano:

nano ~/.bashrc- Add/modify the following line at the end of the file:

export ROS_DOMAIN_ID=<your_ID>- Save the changes and close the editor (in nano, press CTRL+X, then Y to confirm saving, and Enter to finalize).

- To apply the changes, run the following command:

source ~/.bashrcTo verify the domain ID, use:

echo $ROS_DOMAIN_ID -

After successfully setting the

ROS_DOMAIN_ID, verify whether you can see the topics published by the robot from your local machine terminal.

Implementing the IO node

At this point, you should be able to interact with the robot—sending and receiving data. Now, let's set up the basic project structure where you will progressively add files.

Setting Up the Project Structure

- (Open CLion.) Create a

nodesdirectory inside both theincludeandsrcfolders of your CMake project. These directories will hold your node scripts for different components.

You can also create additional directories such as

algorithmsif needed. 2) Inside thenodesdirectories, create two files:

include/nodes/io_node.hpp(for declarations)src/nodes/io_node.cpp(for implementation)

- Open

CMakeLists.txt, review it, and modify it to ensure that your project can be built successfully.

!Remember to update CMakeLists.txt whenever you create new files!

Writing an IO Node for Buttons

- First, gather information about the published topic for buttons (

/bpc_prp_robot/buttons). Determine the message type and its structure using the following commands:

ros2 topic type <topic_name> # Get the type of the message

ros2 interface show <type_of_msg> # Show the structure of the message

Ensure that you are using the correct topic name.

- (Optional) To simplify implementation, create a header file named helper.hpp inside the include folder. Copy and paste the provided code snippet into this file. This helper file will assist you in working with topics efficiently.

#pragma once

#include <iostream>

#include <string>

static const int MAIN_LOOP_PERIOD_MS = 50;

namespace Topic {

const std::string buttons = "/bpc_prp_robot/buttons";

const std::string set_rgb_leds = "/bpc_prp_robot/rgb_leds";

};

namespace Frame {

const std::string origin = "origin";

const std::string robot = "robot";

const std::string lidar = "lidar";

};

TASK 3

- Using the previous tasks as a reference, complete the code for

io_node.hppandio_node.cppto retrieve button press data.Hint: Below is an example

.hppfile. You can use it for inspiration, but modifications are allowed based on your needs.#pragma once #include <rclcpp/rclcpp.hpp> #include <std_msgs/msg/u_int8.hpp> namespace nodes { class IoNode : public rclcpp::Node { public: // Constructor IoNode(); // Destructor (default) ~IoNode() override = default; // Function to retrieve the last pressed button value int get_button_pressed() const; private: // Variable to store the last received button press value int button_pressed_ = -1; // Subscriber for button press messages rclcpp::Subscription<std_msgs::msg::UInt8>::SharedPtr button_subscriber_; // Callback - preprocess received message void on_button_callback(const std_msgs::msg::UInt8::SharedPtr msg); }; }Here is an example of a

.cppfile. However, you need to complete it yourself before you can compile it.#include "my_project/nodes/io_node.hpp" namespace { IoNode::IoNode() { // ... } IoNode::get_button_pressed() const { // ... } // ... } > ``` - Run your program and check if the button press data is being received and processed as expected.

TASK 4

- Add Code for Controlling LEDs

Hints: - The robot subscribes to a topic for controlling LEDs. - Find out which message type is used for controlling LEDs. - Use the CLI to publish test messages and analyze their effect:

ros2 topic pub <led_topic> <message_type> <message_data> - Test LED Functionality with Simple Publishing

- Now, integrate button input with LED output:

- Pressing the first button → All LEDs turn on.

- Pressing the second button → LEDs cycle through colors in a your defined sequence.

- Pressing the third button → The intensity of each LED color component will change according to a mathematical function, with each color phase-shifted by one-third of the cycle.

BONUS: Advanced Visualizations (30 min)

Required Tools: rviz2

Official documentation: RViz2.

In this section, we will learn how to create visualizations in ROS 2 using RViz2. You should refer to the official RViz documentation and the marker tutorial to get a deeper understanding.

ROS 2 provides visualization messages via the visualization_msgs package. These messages allow rendering of various geometric shapes, arrows, lines, polylines, point clouds, text, and mesh grids.

Our objective will be to implement a class that visualizes a floating cube in 3D space while displaying its real-time position next to it.

-

Create the Header File

rviz_example_class.hpp:#pragma once #include <iostream> #include <memory> #include <string> #include <chrono> #include <cmath> #include <iomanip> #include <sstream> #include <rclcpp/rclcpp.hpp> #include <visualization_msgs/msg/marker_array.hpp> #define FORMAT std::fixed << std::setw(5) << std::showpos << std::setprecision(2) class RvizExampleClass : public rclcpp::Node { public: RvizExampleClass(const std::string& topic, double freq) : Node("rviz_example_node") // Node name in ROS 2 { // Create a timer with the specified frequency (Hz) timer_ = this->create_wall_timer( std::chrono::milliseconds(static_cast<int>(1000.0 / freq)), std::bind(&RvizExampleClass::timer_callback, this) ); // Create a publisher for MarkerArray messages markers_publisher_ = this->create_publisher<visualization_msgs::msg::MarkerArray>(topic, 10); } private: class Pose { public: Pose(float x, float y, float z) : x_{x}, y_{y}, z_{z} {} float x() const { return x_; } float y() const { return y_; } float z() const { return z_; } private: const float x_, y_, z_; }; void timer_callback() { auto time = this->now().seconds(); auto pose = Pose(sin(time), cos(time), 0.5 * sin(time * 3)); // Create a MarkerArray message visualization_msgs::msg::MarkerArray msg; msg.markers.push_back(make_cube_marker(pose)); msg.markers.push_back(make_text_marker(pose)); // Publish the marker array markers_publisher_->publish(msg); } visualization_msgs::msg::Marker make_cube_marker(const Pose& pose) { visualization_msgs::msg::Marker cube; // Coordinate system cube.header.frame_id = "map"; // In ROS 2, "map" or "odom" is recommended cube.header.stamp = this->now(); // Marker Type cube.type = visualization_msgs::msg::Marker::CUBE; cube.action = visualization_msgs::msg::Marker::ADD; cube.id = 0; // Position cube.pose.position.x = pose.x(); cube.pose.position.y = pose.y(); cube.pose.position.z = pose.z(); // Orientation (Quaternion) cube.pose.orientation.x = 0.0; cube.pose.orientation.y = 0.0; cube.pose.orientation.z = 0.0; cube.pose.orientation.w = 1.0; // Size cube.scale.x = cube.scale.y = cube.scale.z = 0.1; // Color cube.color.a = 1.0; // Alpha (visibility) cube.color.r = 0.0; cube.color.g = 1.0; cube.color.b = 0.0; return cube; } visualization_msgs::msg::Marker make_text_marker(const Pose& pose) { visualization_msgs::msg::Marker text; // Coordinate system text.header.frame_id = "map"; text.header.stamp = this->now(); // Marker Type text.type = visualization_msgs::msg::Marker::TEXT_VIEW_FACING; text.action = visualization_msgs::msg::Marker::ADD; text.id = 1; // Position (slightly above the cube) text.pose.position.x = pose.x(); text.pose.position.y = pose.y(); text.pose.position.z = pose.z() + 0.2; // Size text.scale.z = 0.1; // Text content std::stringstream stream; stream << "* Cool Cube *" << std::endl << " x: " << FORMAT << pose.x() << std::endl << " y: " << FORMAT << pose.y() << std::endl << " z: " << FORMAT << pose.z(); text.text = stream.str(); // Color text.color.a = 1.0; text.color.r = 1.0; text.color.g = 1.0; text.color.b = 0.0; return text; } // ROS 2 timer rclcpp::TimerBase::SharedPtr timer_; // ROS 2 publisher rclcpp::Publisher<visualization_msgs::msg::MarkerArray>::SharedPtr markers_publisher_; }; -

Add the Following Code to

main.cpp:#include "rviz_example_class.hpp" #include <rclcpp/rclcpp.hpp> int main(int argc, char** argv) { // Initialize ROS 2 rclcpp::init(argc, argv); // Create a node and run it auto node = std::make_shared<RvizExampleClass>("rviz_topic", 30.0); rclcpp::spin(node); // Shutdown ROS 2 rclcpp::shutdown(); return 0; } -

Build and run your project. Then open RViz2

rviz2 -

Add the Visualization Topic

- In RViz2, go to Add → By Topic

- Locate the created topic

rviz_topic - Select

MarkerArrayto display the cube and text

TASK BONUS:

- Check the code and RViz2 features

- Experiment with modifying the code to explore different visualization features.

The goal of this task is to familiarize yourself with RViz2. RViz2 will be used in future exercises, e.g., visualizing LiDAR data.

Lab 4 - Motor, Kinematics & Gamepad

Responsible: Ing. Jakub Minařík

Tasks

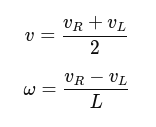

The end result of this lab should be an estimate of position in Cartesian coordinates with the origin at the start position after driving the robot.

1. Motor publisher implementation

- Develop a motor node that publishes wheel velocity commands to a ROS 2 topic (

/bpc_prp_robot/set_motor_speeds). - Ensure the node can send appropriate velocity commands to drive the robot’s wheels.

2. Encoder subscriber implementation

- Extend the motor node or create a separate encoder node to subscribe to an encoder topic for both wheels (

/bpc_prp_robot/encoders).

3. Robot parameter estimation

- Measure, estimate, or derive key robot parameters, such as:

- The relationship between commanded wheel velocity and actual wheel rotation speed.

- The relationship between wheel velocity, wheel radius, and chassis dimensions.

- The kinematic constraints affecting the robot’s movement.

- Motor control values are represented as unsigned 8-bit integers (0–255):

- A value of 127 corresponds to a neutral state (motors do not move).

- Values greater than 127 cause the wheels to rotate forward.

- Values less than 127 cause the wheels to rotate backward.

- The robot should execute the commanded speed for 1 second before stopping.

- The gearbox ratio is 1:48 and the motor likely has 3 pole pairs. It is recommended to test whether the number of ticks corresponds to one full wheel rotation.

- Test whether the number of encoder ticks corresponds to a full wheel rotation by counting the ticks per revolution.

- For additional information, refer to the motor datasheets and check the robot repository: https://github.com/Robotics-BUT/fenrir-project

4. Kinematics and odometry computation

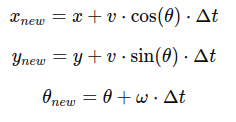

- Implement a class for kinematics and odometry calculations for a differential drive robot.

- Compute the robot pose (position and orientation) based on wheel velocities and time.

- Implement dead reckoning using wheel encoders.

5. Encoder data processing

- Develop a class for processing encoder data (or add to the kinematics/odometry class):

- Estimate the robot displacement and position.

- Apply correction mechanisms using encoder feedback to improve localization accuracy.

6. (Optional) Gamepad control

- Implement a gamepad node to manually control the robot movement.

- Handle relevant gamepad events and publish speeds for them.

Instructions for gamepad — SDL2

- Include SDL2:

#include <SDL2/SDL.h> - Initialize SDL2:

SDL_Init(SDL_INIT_VIDEO | SDL_INIT_GAMECONTROLLER) - Check if a joystick/gamepad is connected:

SDL_NumJoysticks() - Create a gamepad object:

SDL_GameControllerOpen(0) - Poll events in a time loop (e.g., via a ROS 2 timer):

- Create an event object:

SDL_Event - Poll events:

SDL_PollEvent() - Check event types, e.g.,

SDL_CONTROLLERBUTTONDOWN,SDL_CONTROLLERBUTTONUP,SDL_CONTROLLERAXISMOTION - Handle events and set speed and rotation

- Publish a ROS 2 message

- Create an event object:

- Close the gamepad object:

SDL_GameControllerClose()

Tests example

You can copy and create a test file from the example. You will probably need to rename the Kinematics class and its methods or correct parameter types as needed.

#include <gtest/gtest.h>

#include "../include/kinematics.hpp"

#include <cmath>

using namespace algorithms;

constexpr float ERROR = 0.001f;

constexpr float WHEEL_BASE = 0.12f;

constexpr float WHEEL_RADIUS = 0.033f;

constexpr float WHEEL_CIRCUMFERENCE = 2 * M_PI * WHEEL_RADIUS;

constexpr int32_t PULSES_PER_ROTATION = 550;

TEST(KinematicsTest, BackwardZeroVelocitySI) {

constexpr float linear = 0.0f;

constexpr float angular = 0.0f;

constexpr float expected_l = 0.0f;

constexpr float expected_r = 0.0f;

Kinematics kin(WHEEL_RADIUS, WHEEL_BASE, PULSES_PER_ROTATION);

auto result = kin.inverse(RobotSpeed{linear, angular});

EXPECT_NEAR(result.l, expected_l, ERROR);

EXPECT_NEAR(result.r, expected_r, ERROR);

}

TEST(KinematicsTest, BackwardPositiveLinearVelocitySI) {

constexpr float linear = 1.0f;

constexpr float angular = 0.0f;

constexpr float expected_l = 1.0f / WHEEL_CIRCUMFERENCE * 2 * M_PI;

constexpr float expected_r = 1.0f / WHEEL_CIRCUMFERENCE * 2 * M_PI;

Kinematics kin(WHEEL_RADIUS, WHEEL_BASE, PULSES_PER_ROTATION);

auto result = kin.inverse(RobotSpeed{linear, angular});

EXPECT_NEAR(result.l, expected_l, ERROR);

EXPECT_NEAR(result.r, expected_r, ERROR);

}

TEST(KinematicsTest, BackwardPositiveAngularVelocitySI) {

constexpr float linear = 1.0f;

constexpr float angular = 0.0f;

constexpr float expected_l = -(0.5f * WHEEL_BASE) / WHEEL_CIRCUMFERENCE * (2 * M_PI);

constexpr float expected_r = +(0.5f * WHEEL_BASE) / WHEEL_CIRCUMFERENCE * (2 * M_PI);

Kinematics kin(WHEEL_RADIUS, WHEEL_BASE, PULSES_PER_ROTATION);

auto result = kin.inverse(RobotSpeed{linear, angular});

EXPECT_NEAR(result.l, expected_l, ERROR);

EXPECT_NEAR(result.r, expected_r, ERROR);

}

TEST(KinematicsTest, ForwardZeroWheelSpeedSI) {

constexpr float wheel_l = 0;

constexpr float wheel_r = 0;

constexpr float expected_l = 0;

constexpr float expected_a= 0;

Kinematics kin(WHEEL_RADIUS, WHEEL_BASE, PULSES_PER_ROTATION);

auto result = kin.forward(WheelSpeed {wheel_l,wheel_r});

EXPECT_NEAR(result.v, expected_l, ERROR);

EXPECT_NEAR(result.w, expected_a, ERROR);

}

TEST(KinematicsTest, ForwardEqualWheelSpeedsSI) {

constexpr float wheel_l = 1;

constexpr float wheel_r = 1;

constexpr float expected_l = WHEEL_RADIUS;

constexpr float expected_a= 0;

Kinematics kin(WHEEL_RADIUS, WHEEL_BASE, PULSES_PER_ROTATION);

auto result = kin.forward(WheelSpeed {wheel_l,wheel_r});

EXPECT_NEAR(result.v, expected_l, ERROR);

EXPECT_NEAR(result.w, expected_a, ERROR);

}

TEST(KinematicsTest, ForwardOppositeWheelSpeedsSI) {

constexpr float wheel_l = -1;

constexpr float wheel_r = 1;

constexpr float expected_l = 0;

constexpr float expected_a= (WHEEL_RADIUS / (0.5 * WHEEL_BASE));

Kinematics kin(WHEEL_RADIUS, WHEEL_BASE, PULSES_PER_ROTATION);

auto result = kin.forward(WheelSpeed {wheel_l,wheel_r});

EXPECT_NEAR(result.v, expected_l, ERROR);

EXPECT_NEAR(result.w, expected_a, ERROR);;

}

TEST(KinematicsTest, ForwardAndBackwardSI) {

constexpr float wheel_l = 1;

constexpr float wheel_r = -0.5;

Kinematics kin(WHEEL_RADIUS, WHEEL_BASE, PULSES_PER_ROTATION);

auto lin_ang = kin.forward(WheelSpeed {wheel_l,wheel_r});

auto result = kin.inverse(lin_ang);

EXPECT_NEAR(result.l, wheel_l, ERROR);

EXPECT_NEAR(result.r, wheel_r, ERROR);

}

TEST(KinematicsTest, ForwardAndBackwardEncoderDiff) {

constexpr int encoder_l = 0;

constexpr int encoder_r = 550;

Kinematics kin(WHEEL_RADIUS, WHEEL_BASE, PULSES_PER_ROTATION);

auto d_robot_pose = kin.forward(Encoders {encoder_l,encoder_r});

auto result = kin.inverse(d_robot_pose);

EXPECT_NEAR(result.l, encoder_l, 1);

EXPECT_NEAR(result.r, encoder_r, 1);

}

// Main function to run all tests

int main(int argc, char **argv) {

::testing::InitGoogleTest(&argc, argv);

return RUN_ALL_TESTS();

}

Kinematics Header Example

Only example of header - types need to be corrected. Instead of structures you can use, for example std::pair. Function working with coordinates is working with differences.

struct RobotSpeed{

float v; //linear

float w; //angluar

}

struct WheelSpeed{ //depends on you in what units

float l; //left

float r; //right

}

struct Encoders{

int l; //left

int r; //right

}

Coordinates{ //Cartesian coordinates

float x;

float y;

}

class Kinematics{

Kinematics(double wheel_radius, double wheel_base, int ticks_revolution);

RobotSpeed forward(WheelSpeed x) const;

WheelSpeed inverse(RobotSpeed x) const;

Coordinates forward(Encoders x) const;

Encoders inverse(Coordinates x) const;

}

Be Aware of Parallel Programming

When a variable is accessed by multiple threads—such as in the case of an encoder node, where a callback writes the encoder’s value to a variable while another thread reads it—you must use std::mutex or std::atomic to ensure thread safety. More about parallel computing in Multithreading.

Atomic variables

Atomic variables are thread save, but only simple types such as int, float. Name is from atomic operation/instruction - this type of instruction cannot be interrupted when executed, so it blocks the memory until done and other threads are waiting.

std::atomic<int> atomic_int;

Mutex

Mutex can be used to safely modify complex data structures such as std::vector or std::map.

A mutex works by locking a resource when a thread accesses it and unlocking it after the operation is complete. Other threads attempting to access the resource must wait until the mutex is released.

std::mutex mtx;

int shared_value = 0;

void increment()

{

std::lock_guard<std::mutex> lock(mtx);

shared_value++;

}

Lab 5 - Line Estimation

Responsible: Ing. Adam Ligocki, Ph.D.

Line sensor usage (1 h)

In this section, you will create a basic interface to the line sensor backend and inspect raw data.

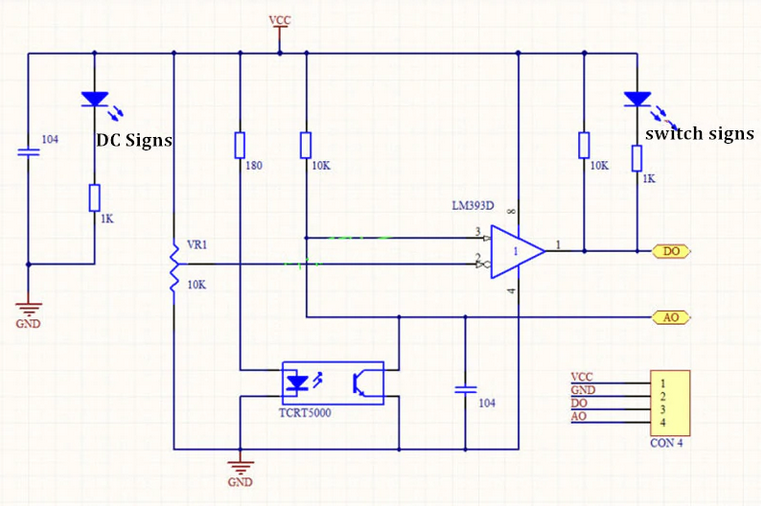

Line sensor explained

We use the TCRT5000 reflective line sensor.

It consists of an infrared LED and a phototransistor placed next to each other. The LED emits IR light; a reflective surface (e.g., a white line) bounces light back to the phototransistor. The amount of reflected light depends on the surface, so the sensor distinguishes light vs. dark areas. By reading the phototransistor output, your code can decide whether the sensor is over a reflective (light) line or a non‑reflective (dark) background.

Image source: https://osoyoo.com/2017/08/15/tcrt5000-infrared-line-tracking-sensor/

To interpret the output value, study the following characteristic curve.

- Over a dark line: little IR returns, the phototransistor is off, and the analog output voltage is high.

- Over a white (reflective) surface: more IR returns, the phototransistor conducts, and the analog output voltage is low (near ground on A0).

Discuss the slope of the curve and the usable range for your application.

Differential sensor usage

Consider using two line sensors in a differential configuration. Treat one sensor as positive and the other as negative. With a clever placement, summing their outputs gives a good estimate of the robot’s lateral position relative to the line.

What about the gap between sensors? How does it affect the line‑following behavior?

Line node implementation

Implement a LineNode class that receives data and encapsulates the line estimation for the rest of the program.

- Create new files according to your project’s conventions.

- Subscribe to the topic

/bpc_prp_robot/line_sensors. - Message type:

std_msgs::msg::UInt16MultiArray.

// Public API sketch; adapt to your project

enum class DiscreteLinePose {

LineOnLeft,

LineOnRight,

LineNone,

LineBoth,

};

class LineNode : public rclcpp::Node {

public:

LineNode();

~LineNode();

// Relative pose to line [m]

float get_continuous_line_pose() const;

DiscreteLinePose get_discrete_line_pose() const;

private:

rclcpp::Subscription<std_msgs::msg::UInt16MultiArray>::SharedPtr line_sensors_subscriber_;

void on_line_sensors_msg(const std_msgs::msg::UInt16MultiArray::SharedPtr& msg);

float estimate_continuous_line_pose(float left_value, float right_value);

DiscreteLinePose estimate_discrete_line_pose(float l_norm, float r_norm);

};

Run the program and print the measured values for verification.

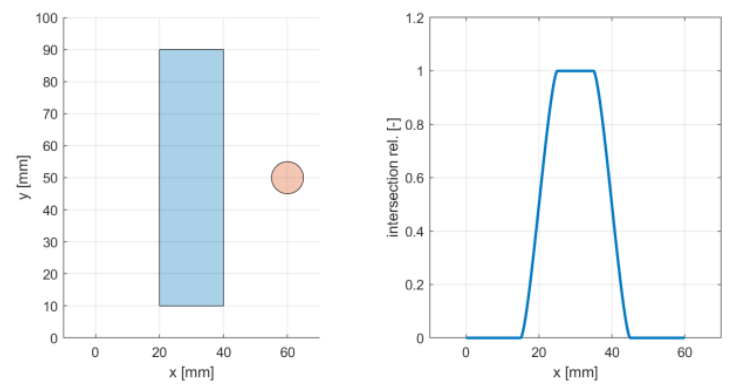

Line position estimation (1 h)

Now focus on estimating the line position. Create a class that encapsulates the algorithm. Inputs are left and right sensor values. Outputs are both the discrete and continuous position of the robot relative to the line.

Use test‑driven development (TDD): write tests first, then implement the algorithm.

// Minimal GTest example for a line estimator

#include <cstdint>

#include <gtest/gtest.h>

TEST(LineEstimator, BasicDiscreteEstimation) {

uint16_t left_value = 0;

uint16_t right_value = 1024;

auto result = LineEstimator::estimate_discrete(left_value, right_value);

EXPECT_EQ(result, /* expected pose */);

}

int main(int argc, char **argv) {

::testing::InitGoogleTest(&argc, argv);

return RUN_ALL_TESTS();

}

By separating the algorithm into its own class, you make testing easier than embedding the logic directly inside LineNode.

Discrete approach

Provide a method that returns a discrete position relative to the line.

class LineEstimator {

public:

static DiscreteLinePose estimate_discrete_line_pose(uint16_t left_val, uint16_t right_val);

};

Continuous approach

Do the same for the continuous case. Use raw sensor values as input and return a floating‑point lateral offset. Tip: scale the output to SI units [m].

class LineEstimator {

public:

static float estimate_continuous_line_pose(uint16_t left_val, uint16_t right_val);

};

Line sensor calibration and arrangement (1 h)

Now review the physical sensor setup. On each robot, sensors may be mounted slightly differently (position, rotation, height above ground, wiring, resistor values, ICs, etc.).

At the start of a run, calibrate the sensors so the algorithm receives comparable values.

How to calibrate the sensor

Capture the minimum and maximum response (min reflection vs. max reflection) and normalize the output so your algorithm always works in the same range.

auto calibrated = (raw - min_val) / (max_val - min_val);

Clamp the normalized value to [0.0, 1.0].

Sensor arrangement

There are several mounting options on the robot. Consider how sensor position, field of view, and dynamic range influence your line‑following algorithm.

- What about the dead zone between sensors?

- What if sensors are too close to each other?

- Should one sensor be amplified relative to the other?

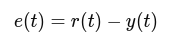

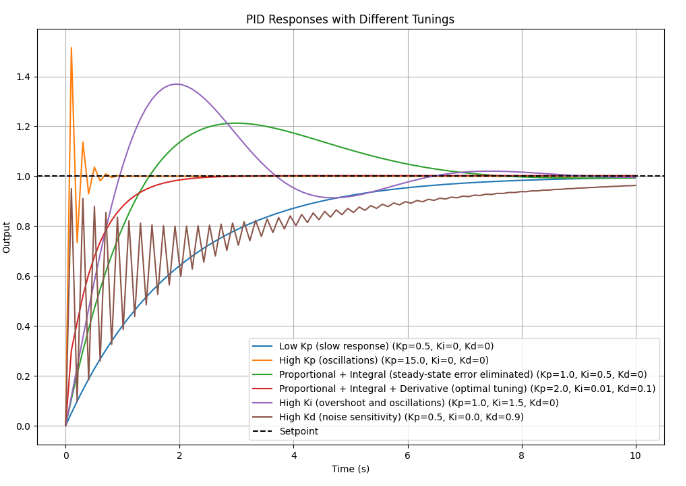

Lab 6 - Line Following & PID

Responsible: Ing. Petr Šopák

Learning objectives

- Bang-Bang Line Following (ON/OFF Control)

- Line Following with P-Control

- Line Following with PID Control

In previous lab sessions, you developed ROS 2 nodes for:

- Collecting data from reflection-based sensors

- Estimating the position of a line

- Controlling the robot's motion

Now, your task is to develop a strategy for line following – that is, responding to the estimated line position and ensuring the robot tracks the line correctly. You will start with the simplest approach (Bang-Bang control) and progressively refine it to implement PID regulation.

For more details, see the Line following chapter: Line following.

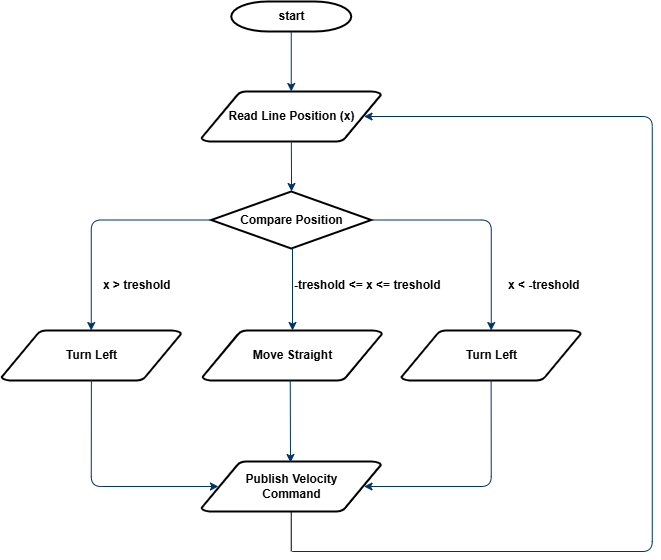

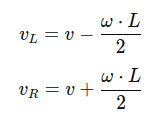

Bang-Bang Line Following (Approx. 1 hour)

Bang-Bang is the most basic control method. Instead of smoothly adjusting the speed, the robot makes hard, discrete decisions based on sensor readings. Think of it as a light switch – either ON or OFF:

- Line is detected on the left → Turn right

- Line is detected on the right → Turn left

- Line is centered → Move straight

This method can be implemented using either digital or analog sensor outputs. Since digital outputs already behave like ON/OFF signals, Bang-Bang logic is straightforward. However, in this lab, we will focus on the analog output, which requires setting a threshold to decide when the robot should turn.

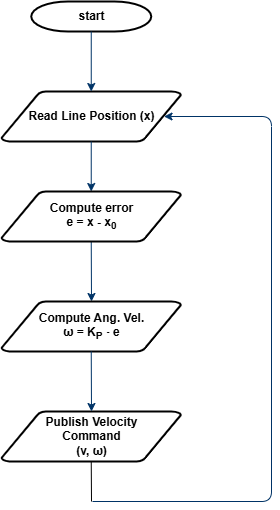

The entire algorithm is illustrated in Figure 1. The process consists of reading the estimated line position and comparing it to a user-defined threshold. Based on this comparison, the robot's movement is determined.

The flowchart provides a generalized structure for Bang-Bang control. However, the specific comparison logic and the velocity values sent to the wheels depend on your own implementation. It is up to you to decide how to structure the control logic in your code.

Figure 1: Flowchart for Bang-Bang Control

To fine-tune the performance, it is recommended to start with a higher threshold and gradually decrease it. Observe how the robot’s behavior changes and try to understand why this approach leads to better results.

TASK 1

- Project Structure:

- Inside the

srcandincludedirectories, create a new folder namedloops. - In this folder, create two files:

line_loop.cppandline_loop.hpp. - These files will define a ROS 2 node that implements a periodic control loop using a timer callback (e.g.,

line_loop_timer_callback()). - The loop should regularly read the estimated line position, compute the control action, and send appropriate speed commands to the robot's motors.

- Implement Bang-Bang Line Control based on the guidelines provided in the lab description.

- Experiment with different threshold values and observe how the robot behaves and analyze the advantages and limitations of Bang-Bang control.

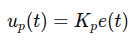

Line Following with P-Control (Approx. 1 hour)

Now you will refine your line-following strategy by implementing Proportional Control (P-Control). Unlike Bang-Bang control, which causes abrupt movements, P-Control allows the robot to adjust its movement smoothly based on how far the estimated line position deviates from the robot’s center. The goal is to achieve smoother and more stable tracking of the line.

Previously you implemented Bang-Bang control, which relied on strict ON/OFF decisions. This approach worked but led to oscillations and jerky movement, as the robot continuously switched between turning left and right. These issues make it difficult for the robot to follow curves or move efficiently at higher speeds.

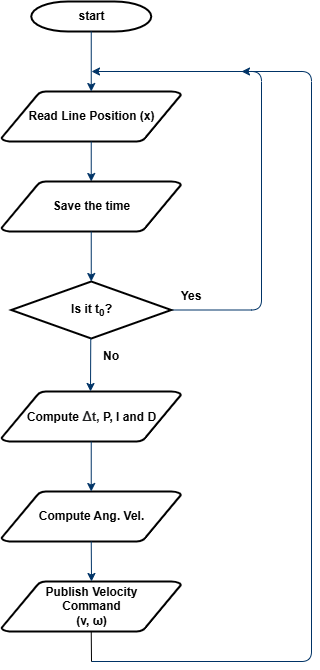

Proportional control solves this by introducing a continuous adjustment to the robot’s turning speed. Instead of making binary decisions, the angular velocity ω is determined using a proportional gain KP and the error e, which represents the difference between the estimated line position x and the robot's center x0:

$$ e = x - x_{0} $$

By multiplying this error by KP, we obtain the angular velocity:

$$ ω = K_{P} \cdot e $$

This means that when the robot is far from the center, it turns more sharply. When it is close to the center, it makes minor corrections. If the line is perfectly centered, the robot moves straight. The higher the proportional gain KP, the stronger the response to the error. However, if KP is too high, the robot may start oscillating.

The process of P-Control is illustrated in Figure 2. The robot reads the estimated position of the line, calculates the error, applies the proportional formula to determine angular velocity, and then sends this velocity to the motion control node, which executes the movement.

Figure 2: Flowchart for P-Control Line Follower

A key part of implementing P-Control is choosing the right value for KP. If KP is too small, the robot will react very slowly and may fail to follow the line accurately. If KP is too large, the robot might oscillate too much and become unstable. The best approach is to start with a low value of KP and gradually increase it, observing how the robot’s movement improves.

TASK 2

- Insert the provided

pid.hppfile into theinclude/algorithmsdirectory. This header defines a basic PID controller class, which you will use for both Task 2 (P-control) and Task 3 (full PID).

#pragma once

#include <iostream>

#include <chrono>

namespace algorithms {

class Pid {

public:

Pid(float kp, float ki, float kd)

: kp_(kp), ki_(ki), kd_(kd), prev_error_(0), integral_(0) {}

float step(float error, float dt) {

integral_ += error * dt;

float derivative = (error - prev_error_) / dt;

float output = kp_ * error + ki_ * integral_ + kd_ * derivative;

prev_error_ = error;

return output;

}

void reset() {

prev_error_ = 0;

integral_ = 0;

}

private:

float kp_;

float ki_;

float kd_;

float prev_error_;

float integral_;

};

}

- Reuse your

LineLoopclass from Task 1 and modify the control logic insideline_loop_timer_callback()to implement a Proportional controller. - Experiment with different values of the proportional gain KP and determine the most suitable value.

- Observe the performance and assess if further refinement is needed.

- Write simple unit tests for the

Pidclass in a separate file namedpid_test.cpp. Here's an example that tests the response of the P-controller to a unit step input:

#include "algorithms/pid.hpp"

#include <iostream>

#include <cassert>

#include <cmath>

using namespace algorithms;

bool nearly_equal(float a, float b, float eps = 1e-5f) {

return std::fabs(a - b) < eps;

}

// Unit step input (constant error = 1.0)

void test_unit_step() {

Pid pid(2.0f, 0.0f, 0.0f); // P-only

float dt = 0.1f;

float error = 1.0f;

for (int i = 0; i < 5; ++i) {

float output = pid.step(error, dt);

assert(nearly_equal(output, 2.0f));

}

std::cout << "[PASS]\n";

}

int main() {

test_unit_step();

std::cout << "All P-controller tests passed.\n";

return 0;

}

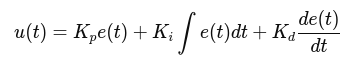

Line Following with PID Control (Approx. 1 hour)

In this part, you will refine your P-Control implementation by adding Integral (I) and Derivative (D) components, creating a PID controller. This will improve stability, reduce oscillations, and enhance the robot’s ability to follow curves accurately.

While P-Control adjusts the robot’s angular velocity based on the current error, it does not account for past errors or predict future corrections. This can result in oscillations or slow responses in certain situations. PID control solves these issues by incorporating two additional terms:

$$ ω = K_{P}e + K_{i}\int e dt + K_{d}\frac{de}{dt} $$

- $$K_{P}e$$ (Proportional term): Reacts to the current error.

- $$K_{i}\int e dt$$ (Integral term): Corrects accumulated past errors.

- $$K_{d}\frac{de}{dt}$$ (Derivative term): Predicts future errors and reduces overshooting.

The integral term helps eliminate steady-state errors, ensuring the robot remains centered over time. The derivative term improves responsiveness by counteracting rapid changes, preventing overshooting and oscillations.

The overall process is illustrated in Figure 3. The robot reads the estimated line position, computes the error, applies the PID formula, and sends the adjusted velocity command to the motion control node.

Figure 3: Flowchart for PID Control

Tuning KP, Ki, Kd is essential for optimal performance. If KP is too high, the robot may oscillate. If Ki is too high, the robot may overcorrect. If Kd is too high, the robot may become too sensitive to small errors. A common approach is to start with only KP, then add Kd, and finally add Ki to eliminate steady-state error.

For more information on PID control implementation and tuning methods, see PID.

TASK 3

- Just like in the previous tasks, extend your

LineLoopclass to implement full PID control using the providedPidclass. Also, don’t forget to extend your unit tests (pid_test.cpp) to verify the behavior of all PID components. - Choose a tuning method (either manual tuning or the Ziegler-Nichols method) and find the optimal values for KP, Ki, Kd.

- Observe the differences between PID control and the previous line-following methods. Analyze how each component affects the robot’s performance.

- (Optional) Implement output saturation (clamping). Real robots cannot turn infinitely fast. If your PID controller outputs a very large value (e.g. due to a sharp error), you should limit (saturate) it to a safe range.

- (Optional) Implement anti-windup. The integral term in a PID controller can sometimes accumulate too much (especially when the output is saturated), which leads to overshooting or instability. This is called integral windup. To prevent this, implement anti-windup, for example disabling integration when output is saturated or limiting the maximum integral value.

Lab 7 - Midterm Test (Line Following)

Responsible: Ing. Adam Ligocki, Ph.D.

Up to 50 points can be earned through two practical demonstrations during the semester.

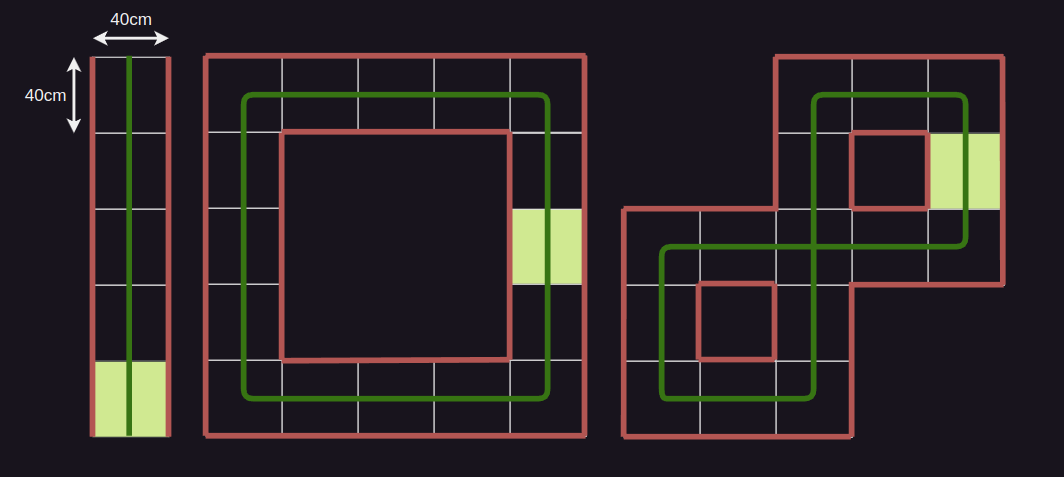

Week 8 — Line following (25 points)

Week 12 — Corridor following (25 points)

Line following rules

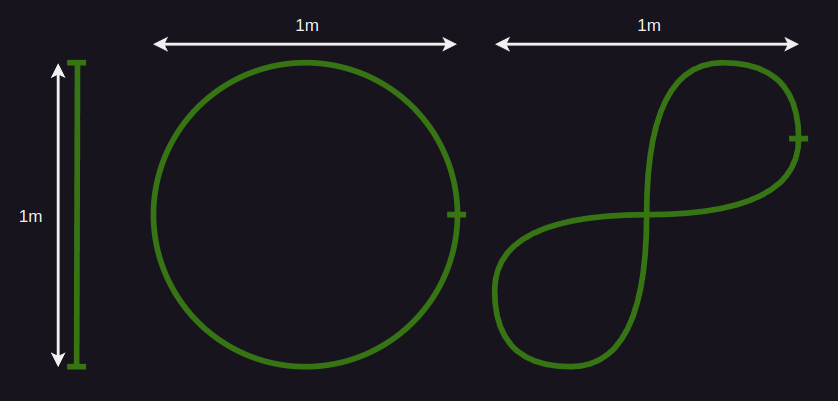

There are 3 tracks:

- Straight line (5 points)

- Simple loop (10 points)

- Complex loop (10 points)

To pass a track, the robot must follow the full length of the line.

If no part of the robot's body covers the line, the attempt fails.

Points are awarded only for completing the entire track.

Teams have 3 attempts per track, with a time limit of 3 minutes per attempt.

All 3 attempts must be performed during a single lab session.

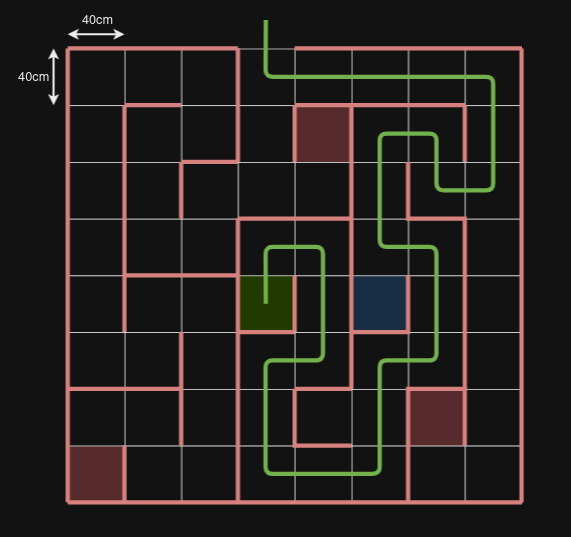

Test tracks

Lab 8 - Obstacle Detection & Corridor Following

Responsible: Ing. Petr Šopák

Learning objectives

1) Understanding and working with LiDAR and/or Ultrasonic Sensors

- Interpreting range data based on sensor principles

- Visualizing live sensor output in RViz2

2) Implementing basic Obstacle Detection

- Detecting nearby obstacles

- Implementing basic obstacle avoidance strategy

3) Implementing corridor following behavior

In the previous labs, you implemented line following — the robot follows a visible line on the floor. This method is useful in controlled environments, such as factory floors or predefined paths. However, line following relies on the presence of artificial markings and provides limited flexibility in more general environments.

In this and the next labs, you will begin working on corridor following, a more natural and scalable navigation strategy. Instead of relying on a line, the robot uses range sensors (LiDAR or ultrasonic) to perceive the environment and stay centered between two walls or obstacles, like navigating a hallway. This approach is closer to what real autonomous robots do in indoor spaces, such as offices, hospitals, or warehouses.

You will first learn how to interpret range data and detect nearby obstacles. Then, you will implement a simple reactive controller that enables the robot to stay within a corridor.

Understanding and working with LiDAR and/or ultrasonic sensors (Approx. 40 minutes)

In this part of the lab, you will get familiar with your chosen range sensor — either LiDAR or ultrasonic. You will explore how it measures distance, how the data is represented in ROS 2, and how to visualize it in RViz2. This will give you the foundation needed for obstacle detection and corridor following tasks in the rest of the lab.

For these labs, please choose one type of range sensor — either ultrasonic or LiDAR. You will work with the selected sensor throughout the exercises. If you later decide to switch to the other sensor or want to use both for comparison or improvement, feel free to do so. The instructions are written to support both sensor types.

A) Light Detection and Ranging (LiDAR) sensor

LiDAR sensors are commonly used in robotics to measure precise distances to surrounding objects. A LiDAR device emits rapid laser pulses and measures the time it takes for each pulse to bounce back from a surface. Using the known speed of light, it calculates the exact distance to each point. Most LiDARs used in mobile robots operate in 2D, scanning a horizontal plane around the robot to produce a range profile of the environment. This allows the robot to detect walls, obstacles, and open spaces with high accuracy and resolution.

When implementing the tasks, please refer to the official documentation of the sensor. You can find the RPLIDAR A1 datasheet here: RPLIDAR A1 datasheet

TASK 1 - A

- Explore the data provided by the sensor - Inspect the raw data in the terminal (Refer to the datasheet if needed to understand parameters)

Understand the meaning of the main fields in the message:

angle_min,angle_max,angle_increment,ranges[],range_max,range_min. - Visualize the LiDAR data in RViz2

- Launch RViz2 and add a LaserScan display (Add → By Topic → LaserScan)

- Set the correct topic name and

Fixed Frameaslidar - (Optional) Customize the display: point size, color, decay time, etc.

Don’t forget to launch RViz2 in a sourced terminal; otherwise topics will not be visible.

- Create a new

ROS 2node for your LiDAR processing- Create

lidar_node.hppandlidar_node.cppinnodesdirectories - In this node, subscribe to the LiDAR topic and process incoming data

- Create

- Think critically about the data

- Are all values in

ranges[]useful for your application?

TIP: LiDAR may return very small values (e.g. 0) or extremely large values (inf). These are usually best ignored.

- Do all directions matter for your robot’s task?

TIP: You can filter only specific angular sectors depending on what you need. (e.g. Front, Right, Left, Back)

- (Optional) Example skeleton for implementing sector-based LiDAR filtering. You may use this as inspiration or create your own version:

#include <cmath> #include <vector> #include <numeric> namespace algorithms { // Structure to store filtered average distances in key directions struct LidarFilterResults { float front; float back; float left; float right; }; class LidarFilter { public: LidarFilter() = default; LidarFilterResults apply_filter(std::vector<float> points, float angle_start, float angle_end) { // Create containers for values in different directions std::vector<float> left{}; std::vector<float> right{}; std::vector<float> front{}; std::vector<float> back{}; // TODO: Define how wide each directional sector should be (in radians) constexpr float angle_range = ; // Compute the angular step between each range reading auto angle_step = (angle_end - angle_start) / points.size(); for (size_t i = 0; i < points.size(); ++i) { auto angle = angle_start + i * angle_step; // TODO: Skip invalid (infinite) readings // TODO: Sort the value into the correct directional bin based on angle } // TODO: Return the average of each sector (basic mean filter) return LidarFilterResults{ .front = , .back = , .left = , .right = , }; } }; } - Are all values in

B) Ultrasonic sensors

Ultrasonic sensors are widely used in robotics for short-range obstacle detection. They work by emitting a high-frequency sound wave and measuring the time it takes for the echo to return after bouncing off an object. Unlike LiDAR, ultrasonic sensors typically measure in a narrow cone, and their readings can be affected by surface material, angle, or ambient noise. They are cost-effective, but require more filtering and careful placement to be reliable.

When implementing the tasks, please refer to the official documentation of the sensor. You can find the HY-SRF05 datasheet here: HY‑SRF05 datasheet

TASK 1 - B

- Explore the data provided by the sensor — Inspect the raw data in the terminal (refer to the datasheet if needed to understand parameters — min/max measurable range, FOV, etc.)

- Visualize the data in rqt (or RViz2 — use the Range display)

- Create a new ROS 2 node for processing ultrasonic data

- Create

ultrasonic_node.hppandultrasonic_node.cppinnodesdirectories - In this node, subscribe to the topic and process incoming data

- Create

- Think critically about the data

- What do the sensor values actually represent?

- Are the sensor readings stable and consistent over time?

TIP: Data is often affected by noise, reflections, and material properties. You may want to ignore extreme or invalid values. Consider applying filtering, such as a moving average or median filter

- If needed, implement a simple filtering algorithm to reduce noise or focus only on relevant angles (e.g. front, sides)

Implementing basic Obstacle Detection (Approx. 40 minutes)

Use your chosen sensor (LiDAR or ultrasonic) to detect whether an object is too close to the robot — for example, less than 0.30 m in front. If an obstacle is detected, the robot should stop and wait instead of continuing forward. This simple reactive behavior is an essential first step toward more advanced navigation strategies such as obstacle avoidance, corridor following, or autonomous path planning.

TASK 2

- Create a new ROS 2 node called

corridor_loopin theloopsdirectory. This node should be similar to theline_loopfrom the previous labs. In this node, you will gradually implement the entire functionality for Corridor Following - Use the sensor data from Task 1. Based on this data, implement a simple algorithm for Obstacle Detection:

- Retrieve the data from the sensors

- If the reading is below a threshold you define, this means the robot is close enough to detect the obstacle

- Test the obstacle detection to ensure the robot detects objects correctly when they are within the defined range.

- Create basic obstacle avoidance logic:

- Make the robot drive forward

- When an obstacle is detected, the robot must stop and not continue moving!

More advanced avoidance behaviors (e.g., turning) will be covered in the next lab.

Implementing corridor following behavior (Approx. 60 minutes)

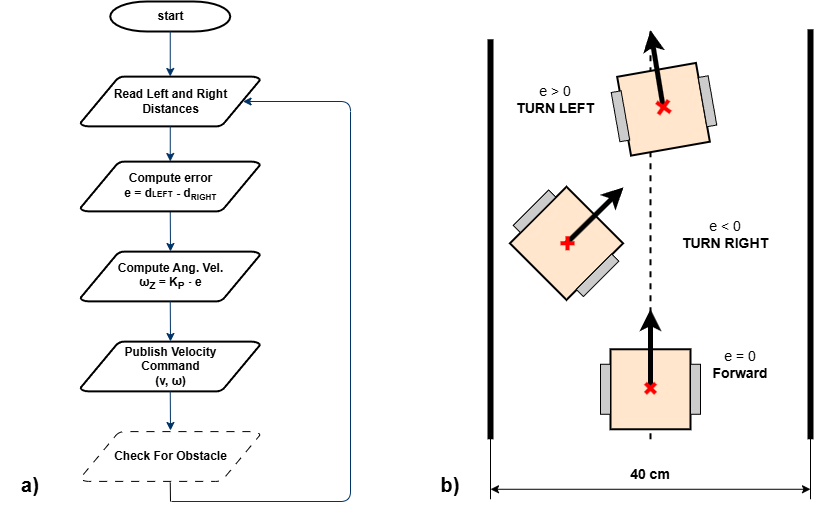

Corridor following allows the robot to stay centered between two walls by adjusting its heading based on distance measurements from both sides. In this task, you will use your sensor data (e.g. LiDAR or ultrasonic) to calculate the lateral error (difference between left and right distances) and correct the robot’s trajectory using proportional control.

Figure 1: Corridor following behavior. a) Flowchart of the corridor following algorithm; b) Robot behavior based on the computed lateral error e.

TASK 3

- Implement the corridor-following algorithm based on the flowchart displayed above.

- Test and tune the algorithm to find the optimal solution for corridor following. (You may use the

pid.hppfor advanced control if desired.)

Note: It is recommended to test corridor following in environments where the turns are not too sharp. This issue will be addressed in the next lab.

Lab 9 – Orientation-Aware Corridor Navigation

Responsible: Ing. Petr Šopák

Learning objectives

1) Understanding robot orientation using IMU (MPU6050)

- Interpreting raw gyroscope data

- Calibrating and integrating gyro values to estimate yaw

2) Extending corridor following with corner handling

- Detecting turns (e.g. 90° corners) using range sensors

- Executing rotation using IMU feedback

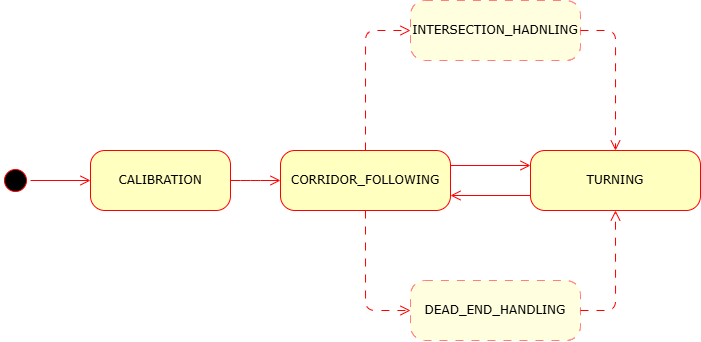

3) Implementing a state-based navigation strategy

- Designing a simple state machine

- Switching between corridor following and turning behavior

Introduction

In Lab 9, you implemented a basic reactive controller that allowed the robot to follow straight corridors using range sensors such as LiDAR or ultrasonic. However, this approach assumes that the path is straight and cannot handle corners or sharp turns.

In this lab, you will enhance that behavior by enabling your robot to detect and turn into new corridor directions (e.g., 90° left or right turns). To accomplish this, you will use an Inertial Measurement Unit (IMU) — specifically the MPU‑6050 (MPU‑6050 datasheet) — to estimate the robot's yaw (rotation around the vertical axis).

The robot will:

- Follow the corridor as before

- Detect the corner

- Rotate in place until it is aligned with the new corridor direction

- Resume forward motion

To implement this, you will also develop a simple finite state machine with at least two states: CORRIDOR_FOLLOWING and TURNING.

IMU and orientation estimation (Approx. 70 minutes)

The MPU6050 sensor provides raw data from a gyroscope and accelerometer. Unlike more advanced IMUs, it does not provide direct orientation estimates such as yaw, pitch, or roll.

To estimate yaw (rotation angle), you will:

- Read the raw gyroscope value for the z-axis (

gyro_z), which represents angular velocity around the vertical axis. - Calibrate:

- Keep the robot still for 2–5 seconds after startup.

- Collect multiple

gyro_zvalues. - Compute the average value as the

gyro_offset.

- Integrate over time:

- Subtract the offset from each reading.

- Multiply by the time delta (

dt) to obtain the yaw angle increment. - Accumulate this over time to estimate the current yaw:

yaw += (gyro_z - offset) * dt;

Practical Integration Tip

In this lab, you are required to implement the yaw integration yourself. No sensor fusion libraries will be used. Keep in mind that this method is sensitive to drift, so proper calibration is critical.

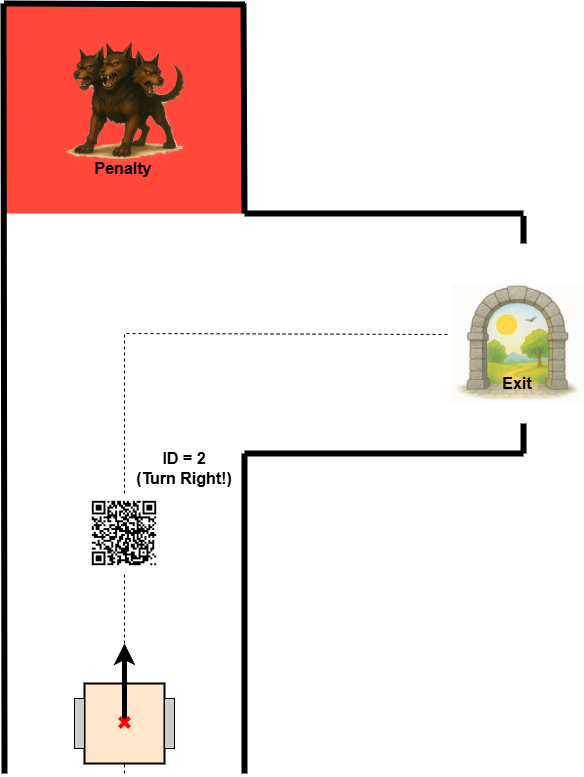

Corner Detection

When following a corridor, the robot can monitor the side range sensors. If a wall suddenly "disappears" on one side (i.e., the distance becomes much larger), and the front is also open, it likely means the corridor turns in that direction.

An alternative strategy is to detect a wall in front of the robot (i.e., front distance drops below a defined threshold), and then search for an opening on the sides to determine where the corridor continues. However, this method is problematic in case of intersections, as the robot may overshoot the corner and fail to turn properly.

TASK 1 – IMU Integration and Yaw Estimation

- Create a new ROS 2 node for the IMU (e.g., imu_node)

- Subscribe to the MPU6050 data and read

gyro_zvalues from the topic. A suggested node structure and a helper class are provided below this task - Implement gyroscope calibration:

- At the beginning of the program, keep the robot completely still for 2–5 seconds

- During this time, collect several

gyro_zvalues. - Compute the average of these samples to obtain the gyroscope offset

gyro_offset.

You will subtract this offset from all future gyro readings to reduce drift

- Estimate yaw (heading):

- In a timed loop, Subtract the

gyro_offsetfrom the currentgyro_zvalue to get the corrected angular velocity - Multiply the corrected value by the time delta

dtto get the yaw increment - Accumulate this increment into a variable yaw that represents the current robot orientation (the formula was described before)

- In a timed loop, Subtract the

- Test IMU-based yaw estimation and implement basic heading correction

- Manual Rotation test

- Calibrate the IMU and store the current yaw

- Pick up or gently rotate the robot by approximately 90° (by hand)

- The robot should detect the yaw error:

float yaw_error = yaw_ref - current_yaw; - If the error exceeds a threshold (e.g. 5°), apply a corrective rotation using differential motor speeds:

float correction = Kp * yaw_error; motor_node->set_motor_speed(127 - correction, 127 + correction); - The robot should rotate back toward its original orientation

- External Disturbance test

- While the robot is driving straight or standing still, apply a light push to rotate it

- The robot should detect the change in yaw and try to rotate back to its original heading based on the integrated yaw

Always calibrate the IMU at the beginning — without proper calibration, even small disturbances will cause significant drift over time!

- Manual Rotation test

Example of imu_node.hpp:

#include <rclcpp/rclcpp.hpp>

#include <sensor_msgs/msg/imu.hpp>

#include "algorithms/planar_imu_integrator.hpp"

namespace nodes {

enum class ImuNodeMode {

CALIBRATE,

INTEGRATE,

};

class ImuNode : public rclcpp::Node {

public:

ImuNode();

~ImuNode() override = default;

// Set the IMU mode

void setMode(ImuNodeMode mode);

// Get the current IMU mode

ImuNodeMode getMode();

// Get the results after integration

auto getIntegratedResults();

// Reset the class

void reset_imu();

private:

void calibrate();

void integrate();

ImuNodeMode mode = ImuNodeMode::INTEGRATE;

rclcpp::Subscription<sensor_msgs::msg::Imu>::SharedPtr imu_subscriber_;

algorithms::PlanarImuIntegrator planar_integrator_;

std::vector<float> gyro_calibration_samples_;

void on_imu_msg(const sensor_msgs::msg::Imu::SharedPtr msg);

};

}

To simplify your IMU logic, use a helper class planar_imu_integrator.hpp to encapsulate yaw estimation. If you later want to include velocity or position tracking, you’ll need to extend the structure. (Don't forget to write the tests)

#include <iostream>

#include <cmath>

#include <numeric>

namespace algorithms {

class PlanarImuIntegrator {

public:

PlanarImuIntegrator() : theta_(0.0f), gyro_offset_(0.0f) {}

// TODO: Call this regularly to integrate gyro_z over time

void update(float gyro_z, double dt);